Introduction to Artificial Neural Networks (ANNs)

Artificial Neural Networks (ANNs) are transforming the way we look at technology and data processing. Imagine a system that learns from experience, much as humans do. That is what ANNs bring to the table: a blend of biology and computer science, loosely inspired by how neurons in the brain work.

As we dive deeper into this intriguing topic, we’ll explore how these networks operate and why they hold immense potential in various fields. From healthcare diagnostics to self-driving cars, understanding Artificial Neural Networks opens up a world filled with possibilities. So, let’s embark on this journey together and uncover the magic behind these incredible systems!

The Components of ANNs

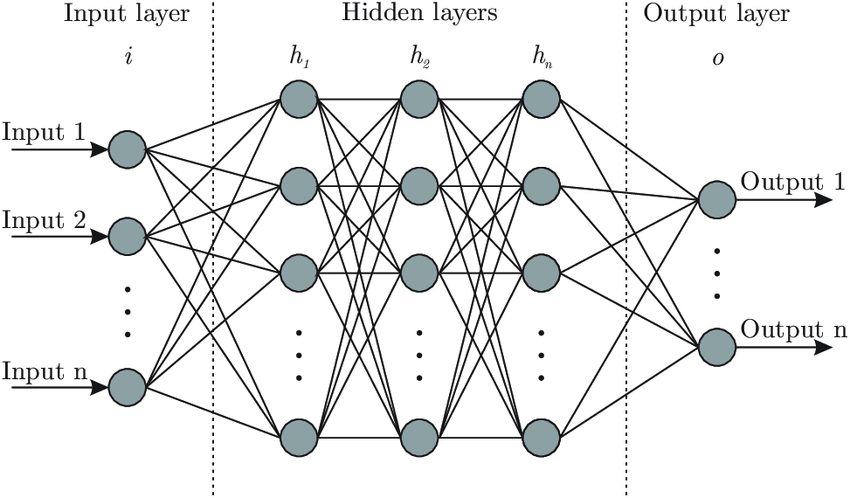

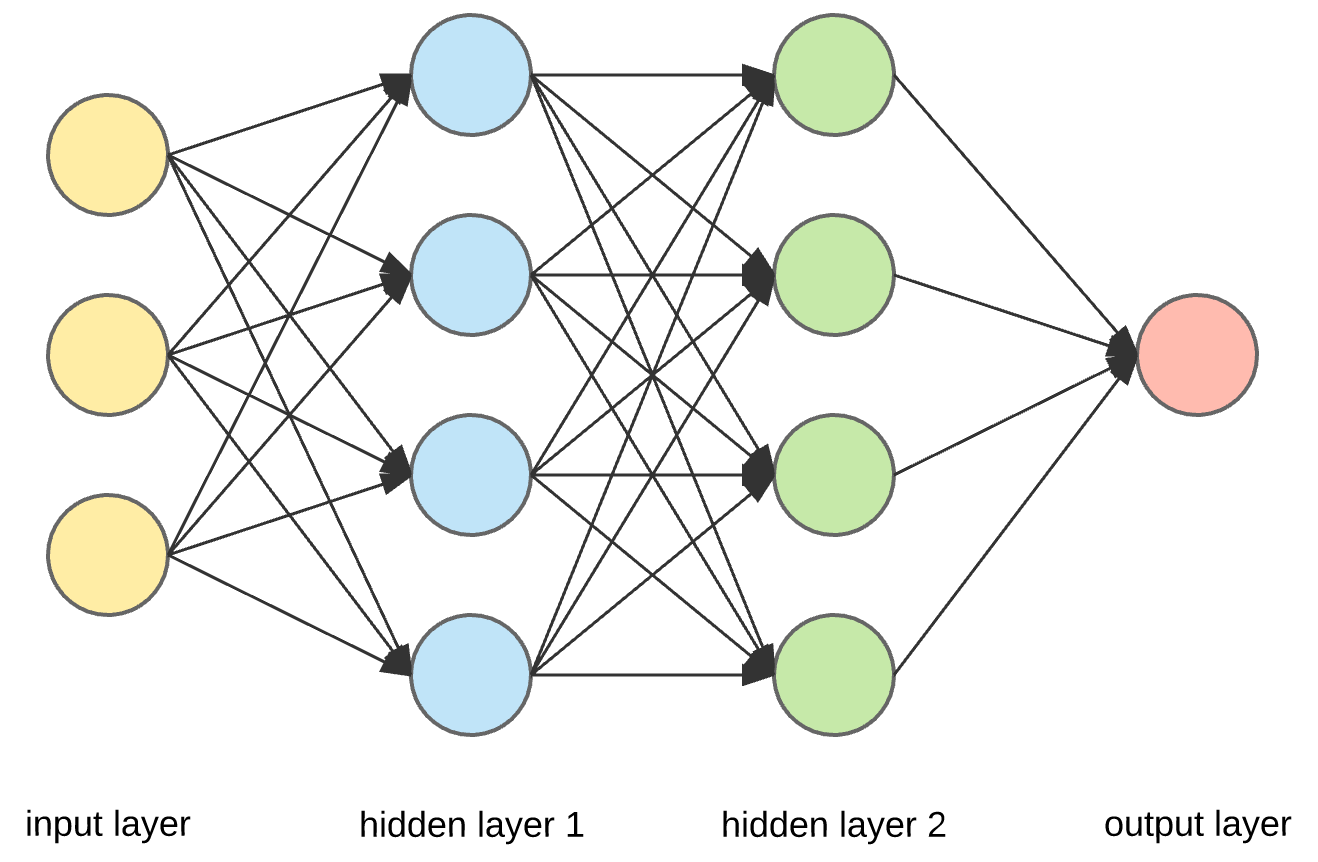

Artificial Neural Networks are built from several key components, loosely modeled on how the brain processes information. At their core, nodes or neurons serve as the fundamental units of computation. Each neuron receives inputs, combines them, and produces an output.

Connections between these neurons play the role of synapses in biological systems. Each connection carries a weight that scales the signal passing through it, and these weights are adjusted during training, which is how ANNs improve over time.
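To make the idea of a neuron and its weighted connections concrete, here is a minimal sketch in plain Python. It assumes the common formulation in which a neuron computes a weighted sum of its inputs plus a bias and squashes the result with a sigmoid; the function name `neuron_output` and the sample numbers are illustrative only.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    # Each connection scales its input by a weight before the values are summed.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid maps any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values, not taken from any real model.
print(neuron_output([0.5, -1.0, 2.0], [0.4, 0.3, -0.2], 0.1))
```

Changing any weight changes how much the corresponding input influences the output, which is exactly what training adjusts.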

Layers play a crucial role too. Typically, there are three types: input layers receive data; hidden layers perform computations; and output layers deliver results. The number of hidden layers, known as the network’s depth, influences its capability to learn complex patterns.

Activation functions transform the weighted sum of a neuron’s inputs into its output, which determines how strongly the neuron fires. They introduce non-linearity into the model, allowing it to capture relationships that a purely linear model cannot.
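As a rough illustration, three widely used activation functions can be written in a few lines of Python. The choice of sigmoid, tanh, and ReLU here is an assumption for the example; the article does not single out any particular function.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through unchanged and clips negatives to zero."""
    return max(0.0, z)

# Compare how each function responds to the same inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

None of these is a straight line, and that curvature is what lets stacked layers represent more than a single linear transformation.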

Types of ANNs

Artificial Neural Networks come in various types, each tailored for specific tasks.

Feedforward Neural Networks are among the simplest. They work by passing data from input to output layers without looping back. This straightforward structure makes them effective for basic functions like classification.
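The one-directional flow from input to output can be sketched as a small forward pass. This is only an illustration: the layer sizes, the hand-picked weights, and the helper names `dense` and `forward` are all hypothetical, and a real network would learn its weights from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]

def forward(x):
    # Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output.
    hidden = dense(x, [[0.5, -0.6], [0.1, 0.8], [-0.3, 0.2]],
                   [0.0, 0.1, -0.1], relu)
    # Data only moves forward; nothing is fed back to an earlier layer.
    return dense(hidden, [[1.0, -1.0, 0.5]], [0.2], sigmoid)

print(forward([1.0, 2.0]))
```

For a binary classification task, the single output could be read as a score between 0 and 1 and thresholded at 0.5.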

Convolutional Neural Networks (CNNs) excel at image processing and recognition. By using filters, they can detect patterns and features in visual data, making them essential in fields such as computer vision.
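To show what "using filters" means in practice, the sketch below slides a small filter over a toy image and records how strongly each patch matches it. The filter is written by hand for illustration, whereas a CNN would learn its filters during training; `conv2d` is a hypothetical helper, not a library function.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Toy 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-written filter that responds where brightness increases left to right.
vertical_edge = [[-1, 1],
                 [-1, 1]]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero column in the result marks the position of the edge, which is the kind of feature early CNN layers tend to pick up.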

Recurrent Neural Networks (RNNs) introduce a unique twist with their ability to process sequences of data. They retain information from previous inputs, which is crucial for applications like language modeling and time series analysis.
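The way an RNN carries information forward can be sketched with a single recurrent unit. This assumes the simplest textbook form, in which the new hidden state is a tanh of the current input combined with the previous state; the weights and the names `rnn_step` and `run_rnn` are illustrative.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    h = 0.0  # the state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states still carry a trace of it.
print(run_rnn([1.0, 0.0, 0.0]))
```

The fading but non-zero states after the first step are the "memory" that makes RNNs suitable for text and time series.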

Generative Adversarial Networks (GANs) take an innovative approach by involving two networks that compete against each other. A generator produces content while a discriminator tries to tell it apart from real examples, and this competition pushes the generator toward increasingly realistic outputs in many creative domains.

Each type serves distinct purposes within the vast landscape of artificial intelligence technology.

Advantages and Applications of ANNs

Artificial Neural Networks (ANNs) offer several advantages that make them invaluable in various fields. Their ability to learn from vast amounts of data allows for high accuracy in tasks like image and speech recognition.

One significant application is in healthcare, where ANNs assist in diagnosing diseases by analyzing medical images with remarkable precision. This capability leads to quicker diagnoses and better patient outcomes.

In finance, ANNs are used for fraud detection, identifying unusual patterns that humans may overlook. They are also used to forecast market trends from historical data, although such forecasts remain uncertain.

Additionally, these networks enhance customer experiences through personalized recommendations on platforms like Netflix or Amazon. By understanding user preferences, they tailor suggestions effectively.

Manufacturing benefits too; ANNs help optimize supply chain logistics, support quality control, and enable predictive maintenance. The versatility of Artificial Neural Networks makes them essential across diverse industries today.

Limitations and Challenges of ANNs

Artificial Neural Networks (ANNs) hold great promise, yet they come with significant limitations. One major challenge is their need for large datasets. Training an ANN effectively often requires vast amounts of data to achieve accuracy and generalization.

Another concern is interpretability. ANNs are sometimes dubbed “black boxes” because understanding how they reach specific decisions can be difficult. This lack of transparency raises issues in sectors such as healthcare, where accountability is crucial.

Additionally, overfitting poses a risk during training. If an ANN fits the training data too closely, memorizing its noise and quirks rather than the underlying patterns, it may perform poorly on new data.

Computational costs also hinder widespread adoption. Running complex models demands substantial resources and time, making them less accessible for smaller organizations or individual developers seeking efficient solutions.

Biases inherent in the training data can lead to skewed results, highlighting the importance of careful dataset curation when employing these networks.

Training and Improving ANNs

Training artificial neural networks is a complex yet fascinating process. It involves feeding the model vast amounts of data to learn patterns and make predictions. This training is typically done using labeled datasets, where the input data corresponds to known outputs.

Adjusting weights and biases during this phase is crucial. The objective is to minimize error, the difference between predicted outcomes and actual results, as measured by a loss function. Backpropagation computes how much each parameter contributed to that error, and an optimizer such as gradient descent uses those gradients to update the parameters.
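The weight-adjustment loop can be sketched for the smallest possible case, a single sigmoid neuron learning the logical OR function. This is a simplified illustration under stated assumptions: squared error as the loss, plain gradient descent as the update rule, and a hypothetical learning rate and epoch count. In a multi-layer network, backpropagation applies the same chain rule layer by layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=2000, lr=0.5):
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, target in data:
            y = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Chain rule for E = 0.5 * (y - target)^2 through the sigmoid:
            # dE/dz = (y - target) * y * (1 - y)
            delta = (y - target) * y * (1.0 - y)
            # Move each parameter a small step against its gradient.
            w[0] -= lr * delta * x[0]
            w[1] -= lr * delta * x[1]
            b -= lr * delta
    return w, b

# Labeled dataset: inputs paired with known outputs (logical OR).
or_gate = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train(or_gate)
predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in or_gate]
print(predictions)
```

After training, the rounded predictions should match the labels, showing that repeated small corrections are enough to fit this simple pattern.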

Moreover, techniques such as dropout help prevent overfitting by randomly disabling certain neurons during training. This promotes generalization across unseen data.
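A minimal sketch of dropout applied to one layer's activations is shown below. It assumes the "inverted dropout" variant, in which the surviving activations are scaled up during training so that nothing needs to change at inference time; the function name and its parameters are illustrative.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Zero each activation with probability `rate`; rescale the survivors."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time every neuron stays active.
        return list(activations)
    keep = 1.0 - rate
    # Dividing by `keep` preserves the expected value of each activation.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded so the example is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, training=False))
```

Because a different random subset of neurons is silenced on each training step, the network cannot rely too heavily on any single one.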

Improving ANNs also requires ongoing evaluation with metrics such as accuracy and loss, measured on data the model did not see during training. Continuous testing helps confirm that models remain robust as new information emerges or when they are applied to different contexts.
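The two metrics mentioned here can be computed as follows for a binary classifier. The example assumes binary cross-entropy as the loss, which is a common choice but not the only one, and the labels and probabilities are made up for illustration.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def binary_cross_entropy(y_true, probs, eps=1e-12):
    """Average penalty for predicted probabilities; lower is better."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

labels = [1, 0, 1, 1]          # made-up ground truth
probs = [0.9, 0.2, 0.4, 0.8]   # made-up model outputs
preds = [1 if p >= 0.5 else 0 for p in probs]

print(accuracy(labels, preds))  # 0.75
print(binary_cross_entropy(labels, probs))
```

Accuracy only counts right and wrong answers, while the loss also reflects how confident the model was, so tracking both gives a fuller picture.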

Experimentation with architectures can further enhance performance; choosing the right layers can significantly impact learning efficiency.

Future Developments in ANNs

The future of artificial neural networks (ANNs) is brimming with potential. Researchers are exploring more sophisticated architectures that mimic the human brain’s complexity. This could lead to enhanced problem-solving capabilities.

One exciting avenue is neuromorphic computing, which aims to build hardware whose circuits are modeled on biological neurons and synapses. Such hardware promises faster and more energy-efficient processing for neural workloads.

Moreover, the integration of quantum computing may change how we train and deploy ANNs. Quantum hardware could, in principle, speed up some of the computations involved, although practical benefits for neural networks remain an open research question.

Ethical considerations also play a crucial role in future developments. As ANNs become more autonomous, guidelines will be essential to ensure responsible usage and mitigate bias.

In fields like healthcare, finance, and climate science, improved predictive models powered by advanced ANNs could transform decision-making processes significantly. The landscape is evolving rapidly; staying engaged with these changes is vital for anyone interested in artificial intelligence.

Conclusion

Artificial Neural Networks (ANNs) have become a cornerstone of modern artificial intelligence. Their brain-inspired design allows them to learn to solve complex problems in various fields. From healthcare and finance to entertainment, the applications are vast and transformative.

The components that make up ANNs—neurons, layers, and activation functions—work together seamlessly to process information. Different types of networks cater to specific needs, whether it’s feedforward networks for straightforward tasks or recurrent networks for sequential data analysis.

Despite their many advantages, such as adaptability and accuracy, ANNs do face limitations. Challenges like overfitting and interpretability need addressing as the technology evolves. The training process is crucial; fine-tuning parameters can lead to significant improvements in performance.

Looking ahead, advancements in neural network architectures promise even more robust capabilities. Researchers continue exploring innovative techniques that may enhance learning processes while reducing computational demands.

As our understanding of these artificial structures deepens, so too does their potential impact on society at large. Embracing this technology will be essential as we navigate future developments in AI.